Facial Aesthetics in Artificial Intelligence: First Investigation Comparing Results in a Generative AI Study

© 2024 HMP Global. All Rights Reserved.

Any views and opinions expressed are those of the author(s) and/or participants and do not necessarily reflect the views, policy, or position of ePlasty or HMP Global, their employees, and affiliates.

Abstract

Background: Patients undergoing facial plastic surgery are increasingly using artificial intelligence (AI) to visualize expected postoperative results. However, variation among AI models and the lack of proper surgical photography in their training sets may produce inaccurate simulations and unrealistic patient expectations. This study aimed to determine whether AI-generated images can convey realistic expectations and be useful in a surgical context.

Methods: The authors used the AI platforms Midjourney (Midjourney, Inc), Leonardo (Canva), and Stable Diffusion (Stability AI) to generate otoplasty, genioplasty, rhinoplasty, and platysmaplasty images. Board-certified plastic surgeons and residents assessed these images using 11 metrics grouped into 2 criteria: realism and clinical value. Data were analyzed using analysis of variance followed by Tukey Honestly Significant Difference post-hoc tests.

Results: Performance for each metric was reported as mean ± SD. Midjourney outperformed Stable Diffusion significantly in realism (3.57 ± 0.58 vs 2.90 ± 0.65; P < .01), while no significant differences in clinical value were observed between the AI models (P = .38). Leonardo outperformed Stable Diffusion significantly in size and volume accuracy (3.83 ± 0.24 vs 3.00 ± 0.36; P = .02). Stable Diffusion underperformed significantly in anatomical correctness, age simulation, and texture mapping (most P values were less than .01). All 3 AI models consistently underperformed in healing and scarring prediction. The uncanny valley effect was also observed by the evaluators.

Conclusions: Certain AI models outperformed others in generating the images, with evaluator opinions varying on their realism and clinical value. Some images reasonably depicted the target area and the expected outcome; however, many images displayed inappropriate postsurgical outcomes or provoked the uncanny valley effect with their lack of realism. The authors stress the need for AI improvement to produce better pre- and postoperative images, and plan for further research comparing AI-generated visuals with actual surgical results.

Introduction

In recent years, artificial intelligence (AI) has been integrated into many professional fields, pushing limits and reshaping what is achievable.1,2 Its uses range from simplifying routine procedures to executing intricate operations that demand accuracy and specialized knowledge.1-3 In health care, for example, AI is improving the identification of cancerous lesions in the breast, head, and neck.4-7 Since the beginning of 2023, the number of scientific publications on AI has increased significantly, particularly in medicine.8-11

Despite the widespread popularity of text-generation models such as ChatGPT (OpenAI) and Bard (Google AI), little to no attention has been paid to verifying or documenting the context behind image generation, particularly in medicine and science. To date, few articles in the medical literature discuss the use of AI technologies to generate images from specific medical prompts. This gap matters, particularly given the availability of powerful and widely accessible tools such as Midjourney (Midjourney, Inc), Leonardo (Canva), and Stable Diffusion (Stability AI). Furthermore, the images created by these tools are original and unrestricted by copyright, thereby addressing the widespread issue of copyright infringement that hampers article production worldwide.12 In addition, these tools are highly accessible, enabling anyone worldwide to generate images with ease.13

One application of AI in medicine is visualizing human anatomy for facial cosmetic surgery. Facial aesthetic surgery depends primarily on a comprehensive understanding of the relevant anatomical structures, including nerves, muscles, bones, and vasculature.14,15 Depicting these structures is essential for planning surgeries, educating patients, and training future specialists. Historically, this has been accomplished through artistic illustration, medical imaging techniques such as MRI and CT, and carefully selected images in medical textbooks.16

AI-powered tools such as Midjourney, Leonardo, and Stable Diffusion have the potential to change surgical representation by creating lifelike, intricately detailed images. These tools are not designed to generate medical material, and they carry disclaimers to that effect. Nevertheless, because they are widely available, easy to use, and affordable, their reliability in answering medical or scientific queries is a concern, and the accuracy of what they produce in response to such queries must be verified. On the other hand, AI-generated images offer several potential benefits: improved efficiency, personalized features, and the capability to produce realistic, compelling images within seconds.17 These traits may appeal to educators, researchers, and practitioners in cosmetic surgery and aesthetic medicine.

Nevertheless, using AI in a domain that demands high levels of delicacy and accuracy raises questions and concerns. Can AI accurately reproduce intricate and precise pre- and postoperative surgical images? Are these images reliable for instruction, surgical planning, and scientific research? Inaccurate portrayals or misinterpretations could have serious repercussions, ranging from flawed medical instruction to surgical mistakes.

With these questions in mind, the purpose of this study was to examine the efficacy and precision of 3 AI models, Midjourney, Leonardo, and Stable Diffusion, in generating pre- and postoperative surgical photographs for select facial aesthetic surgery procedures, and determine if these models could produce images that were not only visually accurate but also anatomically precise and clinically valuable. To our knowledge, this is the first article that focuses on the practicality and accuracy of AI-powered tools in generating pre- and postoperative facial aesthetic surgical photographs.

Methods

Procedure selection

The study focused on 4 facial surgery procedures: otoplasty, genioplasty, rhinoplasty, and platysmaplasty. These common yet complex facial aesthetic procedures were chosen for their clinical significance and for the distinct challenges each presents for AI image generation, providing a broad assessment of the technology's capabilities. Each procedure also addresses distinct aesthetic and functional concerns that are central to facial plastic surgery.18-22 For instance, otoplasty reshapes the ears and therefore requires precision in rendering small, intricate structures. Genioplasty and rhinoplasty involve significant alterations to facial bones and soft tissues that directly affect facial symmetry and proportion, which are key factors in both aesthetics and patient satisfaction. Platysmaplasty focuses on the neck, testing the AI's ability to simulate changes in skin texture, muscle positioning, and overall contour. This diversity allowed us to evaluate whether the AI models could generate images that are both anatomically accurate and clinically meaningful across different surgical scenarios. Furthermore, because the procedures vary in the visibility and complexity of their outcomes, we could assess the AI's performance in simulating both subtle and substantial changes, and thus its strengths and limitations across a spectrum of common and technically demanding cases.

AI image-generation process

This study used 3 advanced AI platforms (Midjourney, Leonardo, and Stable Diffusion) to generate before-and-after images for a series of facial aesthetic surgery procedures. Each platform was chosen for its capability to render high-quality, realistic images from text prompts. ChatGPT-4 was used to craft detailed, procedure-specific prompts to guide image generation. The prompts described both the typical preoperative appearance and the expected postoperative outcome for each procedure without including any real patient-identifiable information, thus ensuring privacy and ethical compliance. The prompts were refined iteratively so that the resulting images were surgically relevant and free of extraneous details. The goal was to standardize prompt design so that the evaluation reflected each platform's best capability to render surgical details under the same conditions, rather than inconsistencies between prompts.
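The standardization goal described above can be sketched as a single prompt template that is filled in once per procedure and sent unchanged to every platform. This is a minimal illustration under stated assumptions: the template wording, the pre- and postoperative descriptions, and the names `PROCEDURES`, `TEMPLATE`, and `build_jobs` are hypothetical and are not the ChatGPT-4 prompts used in the study.

```python
# Hypothetical sketch: one standardized prompt per procedure, reused verbatim
# on every platform so that output differences reflect the models rather than
# the prompt wording. Template text and descriptions are illustrative only.
from dataclasses import dataclass

PROCEDURES = {
    "otoplasty": ("prominent ears protruding from the head",
                  "ears set closer to the head with natural folds"),
    "genioplasty": ("a retruded, weak chin",
                    "an advanced chin in balance with the lower face"),
    "rhinoplasty": ("a dorsal hump and a wide nasal tip",
                    "a straighter dorsum and a refined nasal tip"),
    "platysmaplasty": ("visible platysmal bands and neck laxity",
                       "a smoother, tighter neck contour"),
}

PLATFORMS = ("Midjourney", "Leonardo", "Stable Diffusion")

TEMPLATE = (
    "Clinical photograph, side-by-side composite of one fictional adult patient "
    "before and after {procedure}. Left: {pre}. Right: {post}. "
    "Same person, same pose, same neutral background and lighting; "
    "change only the treated region."
)


@dataclass(frozen=True)
class PromptJob:
    platform: str
    procedure: str
    prompt: str


def build_jobs() -> list[PromptJob]:
    """Return one job per (platform, procedure) pair: 3 x 4 = 12 composites."""
    jobs = []
    for procedure, (pre, post) in PROCEDURES.items():
        # Render the prompt once per procedure...
        prompt = TEMPLATE.format(procedure=procedure, pre=pre, post=post)
        # ...and send the identical string to every platform.
        for platform in PLATFORMS:
            jobs.append(PromptJob(platform, procedure, prompt))
    return jobs


if __name__ == "__main__":
    for job in build_jobs():
        print(f"[{job.platform}] {job.procedure}: {job.prompt[:60]}...")
```

Rendering the prompt outside the platform loop is the design choice that enforces identical inputs across platforms.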

Each AI platform generated a composite image for each of the 4 facial aesthetic procedures, with each composite showing both the preoperative and the corresponding postoperative state. This yielded 4 composite images per platform and 12 composite images in total. All images were 2-dimensional (2D) and were generated from the provided prompts. This format was chosen for evaluation because it is the most common format available to patients and health care professionals who use these platforms to generate visualizations.

Surgeon evaluation protocol

A panel with comprehensive expertise in these types of surgeries, consisting of 2 fellowship-trained craniofacial surgeons, 2 board-certified plastic surgeons, and 2 plastic surgery residents, was convened to assess the AI-generated images. Each evaluator independently reviewed the images without knowing which AI model had generated them. The evaluation used a standardized set of 11 metrics grouped into 2 main criteria: realism and clinical value. Realism comprised the first 7 metrics below, and clinical value comprised the remaining 4:

1. Size and volume accuracy: precision in depicting the expected size and volume.
2. Anatomical correctness: proficiency in producing anatomically accurate visuals.
3. Correct simulation of age: capability to accurately reflect age-related features.
4. Color fidelity: realism of colors compared with real-life tones.
5. Texture mapping: effectiveness in mimicking real skin textures.
6. Symmetry analysis: accuracy of symmetry, which is crucial for balanced reconstructions.
7. Shadow and lighting consistency: accuracy of shadowing and lighting for depth perception.
8. Pathological feature recognition: ability to recognize and depict relevant pathological features.
9. Postoperative result prediction: predictive accuracy for postoperative results.
10. Surgical relevance: consistency of the image with surgical objectives.
11. Healing and scarring prediction: accuracy in predicting postsurgical healing and scarring.
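The grouping of the 11 metrics into the 2 criteria can be expressed as a small data structure. The sketch below assumes, for illustration, that a criterion score is the unweighted mean of its metric scores. The text does not state the aggregation rule, and the function name `category_scores` and the example ratings are hypothetical.

```python
# Sketch of the 11-metric rubric grouped into the 2 evaluation criteria.
# Metric names follow the text; the unweighted-mean aggregation and the
# example ratings are assumptions, not data or methods from the study.
from statistics import mean

REALISM = (
    "size_and_volume_accuracy",
    "anatomical_correctness",
    "age_simulation",
    "color_fidelity",
    "texture_mapping",
    "symmetry_analysis",
    "shadow_and_lighting_consistency",
)
CLINICAL_VALUE = (
    "pathological_feature_recognition",
    "postoperative_result_prediction",
    "surgical_relevance",
    "healing_and_scarring_prediction",
)
METRICS = REALISM + CLINICAL_VALUE


def category_scores(scores: dict[str, float]) -> dict[str, float]:
    """Collapse one evaluator's 11 metric scores for one image into 2 criteria."""
    missing = set(METRICS) - scores.keys()
    if missing:
        raise ValueError(f"missing metrics: {sorted(missing)}")
    return {
        "realism": mean(scores[m] for m in REALISM),
        "clinical_value": mean(scores[m] for m in CLINICAL_VALUE),
    }


# Hypothetical ratings for a single image from a single evaluator.
example = {m: 4 for m in REALISM} | {m: 3 for m in CLINICAL_VALUE}
print(category_scores(example))
```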

Scoring system

A numerical scoring system was established to quantify each evaluation metric: evaluators assigned a score to each criterion for each image. For instance, healing and scarring prediction could be rated on a scale from 1 to 5, with 5 indicating the highest precision.
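The scoring step can be illustrated with a short sketch that checks ratings against a 1-to-5 scale and reports them as mean ± SD, the format used in the Results. It assumes integer ratings, a sample (n - 1) standard deviation, and invented ratings. The function `summarize` is hypothetical.

```python
# Minimal sketch, assuming integer ratings on a 1-5 scale (5 = most accurate)
# and a sample standard deviation; the ratings below are hypothetical.
from statistics import mean, stdev

SCALE = range(1, 6)  # allowed ratings: 1, 2, 3, 4, 5


def summarize(ratings: list[int]) -> tuple[float, float]:
    """Return (mean, sample SD) after checking every rating is on the scale."""
    bad = [r for r in ratings if r not in SCALE]
    if bad:
        raise ValueError(f"ratings outside 1-5: {bad}")
    if len(ratings) < 2:
        raise ValueError("need at least 2 ratings for a sample SD")
    return mean(ratings), stdev(ratings)


# e.g. six evaluators rating healing and scarring prediction for one image
m, sd = summarize([2, 3, 2, 1, 3, 2])
print(f"{m:.2f} ± {sd:.2f}")
```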

Statistical analysis

After the expert assessments, we used analysis of variance (ANOVA) to determine whether significant differences existed in the AI models' realism and clinical value, in their performance on each of the 11 metrics, and in the aggregate performance of the metrics themselves, regardless of AI model. Where ANOVA revealed significant differences, we proceeded to Tukey Honestly Significant Difference (HSD) post-hoc analysis. ANOVA indicates only that at least one group mean differs. Tukey HSD identifies which specific pairs differ while controlling the family-wise false positive rate across all pairwise comparisons, which allowed us to pinpoint the AI models or metrics with the most room for improvement.
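As a rough illustration of the ANOVA step, the following pure-Python sketch computes a one-way ANOVA F statistic with the AI model as the single factor. The one-way layout, the function `one_way_anova`, and the scores are assumptions for illustration, not the study's data or code. The P value would then come from the F distribution with the returned degrees of freedom.

```python
# Hypothetical one-way ANOVA sketch: the AI model is the single factor and
# each list holds the scores that model received. Scores are invented.
from statistics import mean


def one_way_anova(groups: dict[str, list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for k independent groups."""
    all_scores = [x for g in groups.values() for x in g]
    grand_mean = mean(all_scores)
    k, n = len(groups), len(all_scores)

    ss_between = 0.0  # spread of group means around the grand mean
    ss_within = 0.0   # spread of scores around their own group mean
    for g in groups.values():
        group_mean = mean(g)
        ss_between += len(g) * (group_mean - grand_mean) ** 2
        ss_within += sum((x - group_mean) ** 2 for x in g)

    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical scores for one metric, one list per model.
scores = {
    "Midjourney": [4, 4, 3, 4, 3, 4],
    "Leonardo": [3, 4, 3, 3, 4, 3],
    "Stable Diffusion": [3, 2, 3, 3, 2, 3],
}
f_stat, df1, df2 = one_way_anova(scores)
print(f"F({df1}, {df2}) = {f_stat:.2f}")
```

If SciPy is installed, `scipy.stats.f_oneway(*scores.values())` should report the same F statistic together with its P value.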

Ethical considerations

No real patient data or images were used at any point during the research process; thus, this study was exempt from requiring Rutgers New Jersey Medical School institutional review board (IRB) approval.

Results

Images

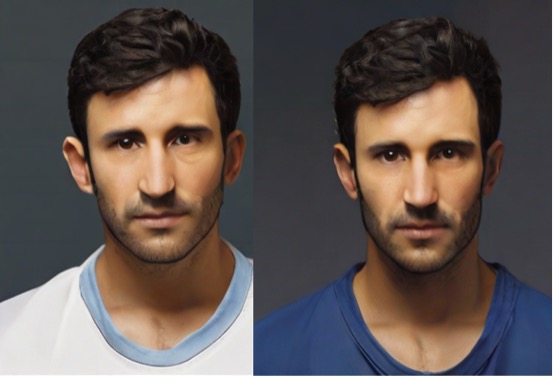

Figures 1, 2, and 3 show the AI-generated pre- and postoperative images for otoplasty, and Figures 4, 5, and 6 show the AI-generated pre- and postoperative images for genioplasty. Figures 7, 8, and 9 show the AI-generated pre- and postoperative images for rhinoplasty, and Figures 10, 11, and 12 show the AI-generated pre- and postoperative images for platysmaplasty.

Figure 1. Pre- and postoperative images of an otoplasty patient generated by Midjourney.

Figure 2. Pre- and postoperative images of an otoplasty patient generated by Leonardo.

Figure 3. Pre- and postoperative images of an otoplasty patient generated by Stable Diffusion.

Figure 4. Pre- and postoperative images of a genioplasty patient generated by Midjourney.

Figure 5. Pre- and postoperative images of a genioplasty patient generated by Leonardo.

Figure 6. Pre- and postoperative images of a genioplasty patient generated by Stable Diffusion.

Figure 7. Pre- and postoperative images of a rhinoplasty patient generated by Midjourney.

Figure 8. Pre- and postoperative images of a rhinoplasty patient generated by Leonardo.

Figure 9. Pre- and postoperative images of a rhinoplasty patient generated by Stable Diffusion.

Figure 10. Pre- and postoperative images of a platysmaplasty patient generated by Midjourney.

Figure 11. Pre- and postoperative images of a platysmaplasty patient generated by Leonardo.

Figure 12. Pre- and postoperative images of a platysmaplasty patient generated by Stable Diffusion.

Statistical analysis

We assessed the realism and clinical value of the 3 AI models, as presented in Figure 13 and the Table. ANOVA yielded a P value of less than .01 for realism and a P value of .38 for clinical value. Thus, the models did not differ significantly in clinical value but differed significantly in realism. The Tukey HSD test showed a significant difference between Midjourney and Stable Diffusion (P < .01), with Midjourney producing more realistic images. No other pairwise differences in realism were significant (Midjourney vs Leonardo, P = .1; Leonardo vs Stable Diffusion, P = .2).
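The pairwise step of the Tukey HSD test can be sketched as follows, using the Tukey-Kramer form of the studentized range statistic q, which also accommodates unequal group sizes. The scores and the function `tukey_q` are hypothetical. The sketch stops at q because converting it to a P value requires the studentized range distribution with k groups and N - k degrees of freedom.

```python
# Hypothetical Tukey-Kramer sketch for the pairwise step of Tukey HSD.
# Scores are invented; only the q statistics are computed here.
from itertools import combinations
from math import sqrt
from statistics import mean


def tukey_q(groups: dict[str, list[float]]) -> dict[tuple[str, str], float]:
    """Return the studentized range statistic q for every pair of groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups.values())
    means = {name: mean(g) for name, g in groups.items()}

    # Pooled within-group variance, i.e. the ANOVA mean square error.
    ss_within = sum(
        (x - means[name]) ** 2 for name, g in groups.items() for x in g
    )
    ms_within = ss_within / (n_total - k)

    q = {}
    for a, b in combinations(groups, 2):
        # Tukey-Kramer standard error; reduces to sqrt(MSE / n) for equal n.
        se = sqrt(ms_within / 2 * (1 / len(groups[a]) + 1 / len(groups[b])))
        q[(a, b)] = abs(means[a] - means[b]) / se
    return q


scores = {
    "Midjourney": [4, 4, 3, 4, 3, 4],
    "Leonardo": [3, 4, 3, 3, 4, 3],
    "Stable Diffusion": [3, 2, 3, 3, 2, 3],
}
for (a, b), q_stat in tukey_q(scores).items():
    print(f"{a} vs {b}: q = {q_stat:.2f}")
```

With SciPy 1.7 or later, `scipy.stats.studentized_range.sf(q, k, N - k)` should convert each q into a P value. `scipy.stats.tukey_hsd` (SciPy 1.8 or later) performs the whole procedure in one call.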

Figure 13. Comparative performance evaluation of Midjourney, Leonardo, and Stable Diffusion on the main categories of realism and clinical value, including their respective subcategories.

We also evaluated the performance of each AI model on each of the 11 metrics; results (mean ± SD) are shown in Figure 13 and the Table. ANOVA showed significant differences between the models in size and volume accuracy (P = .02), anatomical correctness (P = .01), age simulation (P < .01), color fidelity (P < .01), and texture mapping (P < .01). Subsequent Tukey HSD tests showed significant differences between Stable Diffusion and both Leonardo and Midjourney in anatomical correctness (P = .04 and P = .01, respectively), age simulation (both P < .01), and texture mapping (P = .04 and P < .01, respectively), indicating Stable Diffusion's inferiority in these key realism areas. Midjourney outperformed both Leonardo and Stable Diffusion in color fidelity (P = .02 and P < .01, respectively). Finally, in size and volume accuracy, Tukey HSD identified a significant difference only between Leonardo and Stable Diffusion (P = .02), with Leonardo performing better.

We also assessed the performance (mean ± SD) of each evaluation metric across all of the AI models, as shown in Figure 14. ANOVA showed significant differences in mean scores across metrics (P < .01). Tukey HSD post-hoc testing (Figure 15) showed that healing and scarring prediction scored significantly lower than every other metric, with P < .01 in all comparisons except the one with texture mapping, for which P = .049 (still below the .05 threshold). The Tukey HSD test also showed that age simulation accuracy significantly outperformed several other metrics, including color fidelity, texture mapping, shadow and lighting consistency, postoperative result prediction, surgical relevance, and healing and scarring prediction (Figure 16).
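A back-of-the-envelope calculation shows why a post-hoc correction matters for the metric-level comparison. With 11 metrics there are m pairwise comparisons. If each were run as an uncorrected test at α = .05 and, purely for illustration, the tests were treated as independent, the chance of at least one false positive would be:

$$
m = \binom{11}{2} = \frac{11 \times 10}{2} = 55, \qquad
\Pr(\text{at least one false positive}) = 1 - (1 - \alpha)^{m} = 1 - 0.95^{55} \approx 0.94
$$

Tukey HSD keeps this family-wise error rate at or below α across all 55 comparisons. For the 3-model comparison, the corresponding uncorrected figure would be 1 - 0.95^3 ≈ 0.14.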

Figure 14. Average performance scores (± SD) for each evaluation metric across all 3 AI models. Error bars denote the standard deviations, illustrating the performance variability.

Figure 15. Tukey Honestly Significant Difference (HSD) post-hoc analysis: healing and scarring prediction comparisons. This chart shows P values for comparisons between healing and scarring prediction and 10 other metrics. The red dashed line indicates the significance threshold (P = .05); bars crossing this line show nonsignificant differences.

Figure 16. Tukey Honestly Significant Difference (HSD) post-hoc analysis: age simulation accuracy comparisons. This chart shows P values for comparisons between age simulation accuracy and 10 other metrics. The red dashed line indicates the significance threshold (P = .05); bars crossing this line show nonsignificant differences.

Additional results

Feedback from the experts also pointed out instances of the uncanny valley effect, a phenomenon wherein images that are nearly, but not quite, lifelike create a sense of unease.

Discussion

The use of AI in generating before-and-after images for facial aesthetic surgery is an emerging area that blends cutting-edge technology with surgical practice. It holds great promise for enhancing patient care and the planning of surgeries. This study explored AI’s capability to create images that are both lifelike and surgically useful for facial aesthetic surgery, elucidating its potential impact on patient expectations, surgical outcomes, and decision-making processes.

In this study, Midjourney, Leonardo, and Stable Diffusion all struggled to produce images that were both realistic and clinically relevant. This highlights a gap between AI's technological advances and its practical application in real-life clinical settings, so the usefulness of these models in surgical planning remains debatable. When the models were compared across the 11 criteria, Stable Diffusion produced the least realistic images. However, even the other models did not perform convincingly enough to warrant their use in facial cosmetic surgery clinics.

Moreover, the metric for healing and scarring prediction, a critical factor for surgical planning and patient education, significantly underperformed compared with all of the other metrics, while the metric for correct simulation of age significantly outperformed most of the other metrics. This contrast underscores a critical need for improvement in predicting healing outcomes, as this is a topic of immense importance to patients and surgeons.

While AI-generated images can serve as visual aids for anticipating surgical outcomes, they differ significantly from actual postoperative results. The after-images often portray skin tone and texture as unrealistically flawless. They fail to reflect the natural recovery process, which includes bruising, swelling, and temporary discoloration, and this may create unrealistic patient expectations. Additionally, the models' depiction of lighting and shadows can be inconsistent, sometimes dramatically exaggerating the perceived improvement; consistent lighting is crucial for realistic comparisons.

Several observations made during the evaluation also suggest differences between AI-generated images and those typically produced by non-AI tools. For example, in Figure 4, the AI-generated postoperative genioplasty image not only advanced the chin but also appeared to alter the lower lip position and introduce subtle changes in the lower facial contour. Similarly, some figures, such as Figure 10 for platysmaplasty, seemed to include surgical modifications beyond the named procedure. That postoperative image shows the expected reduction in neck sagging, but it also appears to alter the jawline contour and possibly advance the lower face. These changes suggest that the AI may have applied additional enhancements that were not part of the described procedure, such as tightening the skin along the jawline or subtly lifting the lower face. Other images, such as those in Figures 7 and 11, showed changes to adjacent facial structures that were not the focus of the primary surgery, such as alterations in the nasal tip or the appearance of ancillary procedures like genioplasty in conjunction with rhinoplasty or platysmaplasty. In Figure 3, which was intended to depict an otoplasty, the postoperative image shows the expected repositioning of the ears but also appears to have changed the chin from a wider base to a more pointed, narrow shape. The evaluators generally perceived these changes as unrealistic and inconsistent with the expected outcomes of the described procedures. They were judged to be drawbacks because they introduce elements that could mislead patients or create unrealistic expectations about surgical results.

These discrepancies suggest that, while AI tools can generate detailed and realistic images, they may introduce unintended alterations beyond the specific surgical changes intended. This raises important questions about the interpretability and reliability of AI-generated images compared with established non-AI methods. Future research should focus on refining AI algorithms so that they reflect the specified surgical interventions without introducing extraneous changes. It should also directly compare AI-generated images with those produced by non-AI surgical simulation tools to determine the relative accuracy and clinical utility of each approach, particularly how faithfully each reflects the procedure being simulated.

Another key point is that, although the transition between pre- and postoperative images appeared smooth, the images often lacked the nuanced anatomical and healing changes expected after surgery. For instance, the genioplasty images showed noticeable chin advancement but lacked the accompanying changes in the surrounding soft tissue and jawline contour that affect overall facial harmony. Otoplasty images showed significant ear repositioning but appeared too symmetrical, overlooking individual patient variability. In rhinoplasty, the subtle reshaping of nasal structures such as the alar base and nostrils was oversimplified, producing overly symmetrical nostrils, whereas real results usually include slight asymmetries and individual anatomical variation. Platysmaplasty images showed notable reduction in neck sagging, but the skin texture improvement appeared overly optimistic and idealized. The AI smoothed the skin excessively, ignoring realistic postoperative texture and potential residual lines or minor asymmetries.

The origin and confidentiality of the data used to train these neural networks are also worth considering. The training data might have included anatomical images, but likely not in high enough quantities to ensure medically accurate predictions. This situation underscores the importance of thorough testing of AI tools, challenging the public’s growing assumption that they are infallible. This also suggests that the development of AI models for these types of surgery should involve collaboration with experienced surgeons to align the technology with clinical needs.

Future research should also aim to incorporate dynamic assessments, such as videos, into surgical evaluation. These evaluations could potentially capture nerve injuries and other motion-related outcomes that AI programs might predict, providing a more comprehensive understanding of AI's capabilities and limitations in predicting surgical outcomes.

Another important area for future investigation is to include a direct comparison between AI-generated results and those generated by expert surgeons using existing preoperative surgical planning platforms. By directly comparing AI-generated images to expert-generated ones, we can better assess the realism and clinical accuracy of AI tools. This comparison will also help identify areas where AI models need further refinement to meet the standards set by human expertise.

Ethical considerations

The integration of AI in predicting surgical outcomes, especially in aesthetic surgery, raises several ethical considerations that must be addressed to ensure responsible use and patient safety. First, using AI to predict surgical outcomes requires a clear and thorough informed consent process. Patients must understand that AI-generated images are based on predictive models and may not accurately represent individual outcomes. Emphasizing the experimental nature of these predictions is crucial to avoid creating unrealistic expectations. Patients should be informed of the potential discrepancies between AI predictions and actual surgical results, ensuring they have a realistic understanding of the technology's capabilities and limitations.

Data privacy and confidentiality are also critical when using AI in medical imaging. It is imperative to ensure that any patient data used in training AI models is anonymized and handled in compliance with data protection regulations such as the General Data Protection Regulation (GDPR) in Europe and the Health Insurance Portability and Accountability Act (HIPAA) in the United States. Protecting patient data from unauthorized access and potential misuse is a fundamental ethical obligation.

The accuracy and reliability of AI predictions are major ethical concerns. AI models must undergo rigorous validation to ensure they provide clinically relevant and accurate predictions. This involves continuous testing against actual surgical outcomes and updating the models as new data becomes available. Overreliance on unvalidated AI predictions can lead to poor surgical planning and unsatisfactory patient outcomes. Therefore, AI tools should complement, rather than replace, professional clinical judgment.

Moreover, AI models are only as good as the data on which they are trained. There is a risk of inherent bias in AI predictions if the training data is not representative of the diverse patient population. This can lead to skewed predictions that may not be applicable to all demographic groups. It is essential to ensure that AI models are trained on diverse datasets that include various ages, genders, ethnicities, and body types to provide fair and equitable predictions.

AI-generated images can also significantly influence patient expectations and decision-making. There is a potential risk that patients might place undue confidence in AI predictions, leading to disappointment if the actual surgical results differ. It is crucial for surgeons to manage these expectations by clearly communicating the limitations of AI-generated images and emphasizing the variability of individual surgical outcomes. Surgeons should use AI predictions as a tool to facilitate discussions rather than as definitive forecasts.

Finally, beyond technical and clinical considerations, and beyond patient consent and data protection, ethical issues extend to equity and access. The benefits of AI technology must be distributed broadly to avoid exacerbating health disparities. Addressing practical challenges, such as integrating AI with electronic health records and training medical professionals to use AI, requires cooperation among stakeholders across the health care ecosystem.

Limitations

Although this research represents an initial exploration into the role of generative AI in several types of facial plastic surgery, it has several limitations. First, using ChatGPT-4 for prompt engineering introduces variability in the quality and detail of prompts, which may affect image generation. Second, although the evaluation method was extensive, it remains open to subjective interpretation, even by experienced surgeons. Third, the small group of evaluators may not capture the wide range of perspectives in the facial aesthetic surgery field. Finally, by focusing on only 3 AI platforms, we may not have covered the full spectrum of available, and potentially superior, technologies. These challenges highlight the need for further studies to develop standardized prompt-creation methods, expand the pool of evaluators, and extend the analysis to more AI technologies.

The observation of the uncanny valley phenomenon in this study also deserves attention for its possible adverse effects on patient expectations and satisfaction.4 It is essential for health care practices to recognize and mitigate the negative impacts of AI-generated images to prevent increased preoperative anxiety among patients.

In this study, the evaluation focused exclusively on AI-generated images without direct comparison to traditional non-AI surgical simulation tools, such as those involving optical scanners or other advanced imaging technologies. While the evaluators had experience with various non-AI simulation tools commonly used in surgical planning and education, a systematic comparison between these tools and the AI-generated images was not within the scope of this study.

In addition, although the evaluation of AI-generated images was conducted by both consultants and trainees, a comparative analysis between these 2 groups was not performed as part of this study. The decision to focus on overall evaluation metrics without distinguishing between the experience levels of the evaluators was made to provide a general assessment of the AI tools' performance. However, we recognize that differences in experience may influence the perception of image realism and clinical value. Future studies could benefit from including a stratified analysis to explore potential variations in assessments between consultants and trainees, which could provide further insights into the clinical applicability of AI-generated images in surgical planning.

Conclusions

While AI's capability to generate pre- and postsurgery images shows promise, there remains a clear need for more precise and clinically useful tools in this field. The study advocates for closer collaboration between technologists, engineers, and experienced surgeons to fully realize the potential of AI in this space. Future research is vital to ensure the safe and effective use of AI and to build the foundation for advances in the accuracy, predictive power, prognostic capability, and clinical utility of AI-generated images in the field of facial plastic surgery.

Acknowledgments

Authors: Arsany Yassa, BA1; Arya Akhavan, MD1; Solina Ayad2,3; Olivia Ayad, BS, MSc2,4,5; Anthony Colon, MD1; Ashley Ignatiuk, MD, MSC, FRCSC1

Affiliations: 1Division of Plastic and Reconstructive Surgery, Rutgers New Jersey Medical School, Newark, New Jersey; 2Founder and Researcher, Arclivia, a platform for innovation and research in AI integration, Bayonne, New Jersey; 3Faculty of Engineering, The British University in Egypt (BUE), El Sherouk City, Cairo, Egypt; 4Department of Architecture and Territory, Mediterranean University of Reggio Calabria, Calabria, Italy; 5Department of Landscape Architecture, International Credit Hours Engineering Programs of Ain Shams University, Cairo, Egypt

Correspondence: Ashley Ignatiuk, MD, MSC, FRCSC, Department of Plastic Surgery, Rutgers New Jersey Medical School, Doctor's Office Center (DOC), 90 Bergen Street, Suite 7200, Newark, NJ 07101, USA. Email: ai253@njms.rutgers.edu

Ethics: The study did not use any real patient or animal data or imagery; therefore, approval from the institutional review board (IRB) of Rutgers New Jersey Medical School was not required.

Disclosure: The authors have no financial or other conflicts of interest to disclose.

References

1. Iansiti M, Lakhani KR. Competing in the Age of AI: Strategy and Leadership When Algorithms and Networks Run the World. Harvard Business Review Press; 2020.

2. Plathottam SJ, Rzonca A, Lakhnori R, Iloeje CO. A review of artificial intelligence applications in manufacturing operations. J Adv Manuf Process. 2023;5(3):e10159. doi:10.1002/amp2.10159

3. Marr B. Artificial Intelligence in Practice: How 50 Successful Companies Used AI and Machine Learning to Solve Problems. Wiley; 2019.

4. MacDorman KF, Green RD, Ho CC, Koch CT. Too real for comfort? Uncanny responses to computer generated faces. Comput Human Behav. 2009;25(3):695-710. doi:10.1016/j.chb.2008.12.026

5. Mahmood H, Shaban M, Indave BI, Santos-Silva AR, Rajpoot N, Khurram SA. Use of artificial intelligence in diagnosis of head and neck precancerous and cancerous lesions: a systematic review. Oral Oncol. 2020;110:104885. doi:10.1016/j.oraloncology.2020.104885

6. Bi WL, Hosny A, Schabath MB, et al. Artificial intelligence in cancer imaging: clinical challenges and applications. CA Cancer J Clin. 2019;69(2):127-157. doi:10.3322/caac.21552

7. Sheth D, Giger ML. Artificial intelligence in the interpretation of breast cancer on MRI. J Magn Reson Imaging. 2020;51(5):1310-1324. doi:10.1002/jmri.26878

8. Haug CJ, Drazen JM. Artificial intelligence and machine learning in clinical medicine, 2023. N Engl J Med. 2023;388(13):1201-1208. doi:10.1056/NEJMra2302038

9. Al Kuwaiti A, Nazer K, Al-Reedy A, et al. A review of the role of artificial intelligence in healthcare. J Pers Med. 2023;13(6):951. doi:10.3390/jpm13060951

10. Gu J, Gao C, Wang L. The evolution of artificial intelligence in biomedicine: bibliometric analysis. JMIR AI. 2023;2:e45770. doi:10.2196/45770

11. Heger KA, Waldstein SM. Artificial intelligence in retinal imaging: current status and future prospects. Expert Rev Med Devices. 2024;21(1-2):73-89. doi:10.1080/17434440.2023.2294364

12. Iaia V. To be, or not to be… original under copyright law, that is (one of) the main questions concerning AI-produced works. GRUR International. 2022;71(9):793-812. doi:10.1093/grurint/ikac087

13. AlDahoul N, Hong J, Varvello M, Zaki Y. Exploring the potential of generative AI for the world wide web. arXiv. Preprint posted online October 26, 2023. doi:10.48550/arXiv.2310.17370

14. Eng JS. Can aesthetic surgeons be true artists? Aesthetic Plast Surg. 2009;33(2):137-138; discussion 139. doi:10.1007/s00266-008-9291-y

15. Turney BW. Anatomy in a modern medical curriculum. Ann R Coll Surg Engl. 2007;89(2):104-107. doi:10.1308/003588407X168244

16. Netter FH. Atlas of Human Anatomy. 6th ed. Saunders; 2014.

17. Göring S, Rao RRR, Merten R, Raake A. Analysis of appeal for realistic AI-generated photos. IEEE Access. 2023;11. doi:10.1109/ACCESS.2023.3267968

18. Janis JE, Rohrich RJ, Gutowski KA. Otoplasty. Plast Reconstr Surg. 2005;115(4):60e-72e. doi:10.1097/01.prs.0000156218.93855.c9

19. Gola R. Functional and esthetic rhinoplasty. Aesthetic Plast Surg. 2003;27(5):390-396. doi:10.1007/s00266-003-2136-9

20. Lecointre F, Nicoletis C. The contribution of genioplasty in esthetic surgery of the face. Article in French. Ann Chir Plast Esthet. 1989;34(5):395-401.

21. Abadi M, Pour OB. Genioplasty. Facial Plast Surg. 2015;31(5):513-522. doi:10.1055/s-0035-1567882

22. Ramírez OM. Advanced considerations determining procedure selection in cervicoplasty. Part one: anatomy and aesthetics. Clin Plast Surg. 2008;35(4):679-690, viii. doi:10.1016/j.cps.2008.05.002